Rise of AI Agents: What Businesses Need to Know

- Michael Plis

- Jul 22, 2025

- 14 min read

Updated: Jul 27, 2025

OpenAI’s ChatGPT AI Agent feature was introduced at a July 2025 launch event. AI agents are a new breed of AI-powered software that can take actions on your behalf, not just answer questions and it's being rolled out across the top major vendors such as OpenAI. This article will help businesses and particularly small businesses to understand the AI Agents more as they start to become available for use and to workout if they are needed and their risks.

Contents

What are AI Agents?

OpenAI’s latest “ChatGPT Agent” upgrade (powered by GPT-4o) can carry out tasks end-to-end using its own virtual computer. For example, you can ask ChatGPT to “plan and buy ingredients for breakfast” or “review my calendar and brief me on upcoming meetings” – and it will navigate websites, log in (with permission), run code, and even produce editable slides or spreadsheets summarizing results.

Other AI agent platforms and frameworks have also emerged: open-source projects like Auto-GPT, AgentGPT and BabyAGI let anyone build autonomous agents, and major companies (e.g. Google’s upcoming Gemini agentic model - at least they are indicating this is in the works & they will want to compete with Grok and ChatGPT) are jumping on board. In short, think of AI agents as digital coworkers that browse the web, schedule tasks, buy tickets, file reports, manipulate files or code, and learn from your preferences over time – far beyond what a static chatbot could do.

"AI agent is “designed to autonomously collect data from its environment, process information and take actions”

Behind the scenes, AI agents combine a large language model (LLM) with a suite of tools and APIs. As Palo Alto’s Unit42 explains, an AI agent is “designed to autonomously collect data from its environment, process information and take actions” toward your objectives. It uses the LLM to plan and reason, then calls external tools (like a web browser, code interpreter, or file manager) as needed.

Figure 1 below illustrates a typical AI agent architecture: the LLM powers planning, an execution loop calls various tools or functions, and the agent can even store short- or long-term memory to track context.

Now let's discover what they can do.

Key AI-Agent Capabilities

The new AI agents can do among other things the following type of actions:

Browse and interact with websites: Agents can navigate the web like a user, clicking links, filling forms, and scraping data. This enables them to find information, compare products, or perform online bookings.

Manage scheduling and purchases: Agents can access your calendar and make appointments or reservations. They can even add items to a shopping cart or order groceries (with your approval).

Automate complex workflows: Agents link to tools like code interpreters and spreadsheets. For example, they can analyze competitor data, run code to process it, and output charts or slide decks summarizing the results.

Create and handle files: They can generate documents, edit spreadsheets, or compile reports on the fly. You might tell an agent to “generate a slideshow on market trends” and it will do so using built-in presentation tools.

Learn and personalize: Over time, agents can remember your preferences (e.g. preferred travel options or file formats) via connected “memory” features or accounts, making them more efficient at repeat tasks.

Other future capabilities: AI Agents will definitely going to have more skills s they are upgraded and compete against each other.

These abilities hold great promise. And for the agent, skills will keep getting added particularly "in in front of the computer" type tasks, which eventually all will be doable with AI agents with minimal human supervision or human supervision. Which is what Sam Altman recently mentioned.

AI Agents may be open a whole new attack surface

But as soon as agents gained autonomy, security experts have been sounding the alarm: AI Agents may be open a whole new attack surface. So now let's analyse the cybersecurity risks that may potentially happen to AI Agents below.

Cybersecurity Risks of Autonomous AI Agents

AI agents inherit all the risks of LLMs (like data leakage or prompt injection) and add many more. Trend Micro and I caution that linking an AI agent to external systems raises fundamental security questions:

Could an LLM executing code be hijacked to run harmful commands?

Could hidden instructions in a document trick the agent into leaking your passwords or client data?

Could an AI Agent give information or money away to malicious websites or services it accesses?

Can you think of any others?

Palo Alto’s Unit42 likewise warns that agents combine language-model threats with traditional software vulnerabilities. Because agents call external tools, they can be exposed to SQL injection, remote code execution (RCE), broken access controls, and other classic flaws in the connected systems.

In practice, experts see several concrete threats:

Prompt Injection (Malicious Web Content): Perhaps the biggest worry is prompt injection. Malicious websites could hide deceptive instructions (e.g. in invisible page elements or corrupted metadata) that an agent might follow. For instance, a fake site might show a phishing form asking the agent, “Enter your credit card to proceed with this task,” hoping the helpful agent will comply. OpenAI’s own launch team called out this scenario: agents “stumble upon a malicious website that asks it to enter your credit card information… and the agent, which is trained to be helpful, might decide that’s a good idea”. The agent could unknowingly expose sensitive data or perform unintended actions. Analysts have already demonstrated prompt-injection hacks on open-source agents like Auto-GPT, forcing them to ignore their original goal and execute attacker-supplied commands.

Tool Misuse and Goal Manipulation: Attackers may trick an agent into abusing its connected tools. For example, a cleverly crafted prompt could coax an agent to run harmful code, send spam emails, or modify critical data. Unit42’s research highlights “tool misuse” as a risk: an adversary might manipulate the agent to trigger unintended API calls or to break policies inside the tools it uses. Agents that plan multi-step tasks can also be hijacked at the planning level – subtle changes to an agent’s perceived goals could redirect its actions. In other words, the agent’s plan itself can be tampered with, leading to entirely malicious workflows.

Credential Theft and Impersonation: AI agents often require login tokens or API keys to do their job (e.g. access your email or calendar). If these credentials leak (say, via prompt injection or misconfiguration), attackers could impersonate the agent or your user identity on other systems. Unit42 warns of this: “theft of agent credentials… can allow attackers to access tools, data or systems under a false identity.”. A compromised agent identity might then execute privileged actions on behalf of the user or organization. Likewise, attackers could spoof an agent’s identity to trick other systems or trick human users.

Unauthorized Code Execution and Data Exfiltration: Since agents can execute code (through built-in interpreters or APIs), an attacker who sneaks code into an agent’s context could gain full RCE in the agent’s environment. This could expose the host network, internal files, or sensitive information. Unit42 emphasizes the RCE danger: “Attackers exploit the agent’s ability to execute code… by injecting malicious code, they can gain unauthorized access to the internal network and host file system.”. In a breached scenario, the agent becomes a pivot point into your IT environment. Similarly, agents can inadvertently exfiltrate data: a hidden prompt might force the agent to email your customer database to an attacker, or to paste your payment info into a shady form.

Expanded Attack Surface (Phishing, Spam, etc.): AI agents effectively act as new endpoints on your network. They generate unusual traffic patterns (automated browsing, high-frequency form submissions, etc.) that could be exploited by attackers. Phishing could evolve into “agent-phishing,” where malicious sites target the agents themselves. Other risks include poisoned training data (if the agent uses web content to learn or fine-tune) or corrupted plugin scripts. In short, anything vulnerable on a regular computer or browser is now also a vulnerability for the agent’s “virtual machine.”

Security researchers stress that these threats are real and growing. As one analyst put it, “any company that uses an autonomous agent like Auto-GPT to accomplish a task has now unwittingly introduced a vulnerability to prompt injection attacks.”.

Trend Micro observes that AI agent vulnerabilities can directly lead to “theft of sensitive company or user data, unauthorized execution of malicious code, manipulation of AI-generated responses [and] indirect prompt injections leading to persistent exploits”.

OpenAI itself acknowledges the risk: their announcement notes that having ChatGPT act on the live web “introduces new risks” and that “successful attacks can have greater impact and pose higher risks”.

In sum, AI agents may be powerful helpers, but “AI agent fraud is real” as Stytch warns – attackers will exploit injected prompts, deepfakes and impersonation to trick or hijack these agents.

Now that we know some of the cybersecurity risks associated with AI agents let's look at some possible cybersecurity best practices as well as defenses that businesses and the AI Agent developers themselves can implement - because, yes, the onus is primarily on AI Agent developers to ensure their agents are secure and your data that you trust the AI Agents with is handled right.

Securing AI Agents: Best Practices and Defenses

Given the stakes, cybersecurity professionals and developers are already outlining security-first strategies for AI agents. The consensus is that defense-in-depth is essential – no single fix will suffice. Here are key ideas from experts and vendors for making AI agents safer:

Strong Authentication & Scoped Permissions: Never trust an agent by default.

Each agent should have only the minimum privileges needed (the principle of least privilege). For example, if an agent only needs to read your calendar, it should never have permission to send emails or access banking.

Experts recommend using modern delegation protocols (like OAuth2) to issue each agent a scoped token tied to your user account. This way, agents never store your raw passwords or API keys – they act through limited tokens. You can further impose time limits or step-up (MFA) for sensitive actions.

Stytch’s guidance specifically advises assigning “each agent a scoped token tied to a user-approved session”, so you can revoke or expire it at any time. In practice, treat the agent like a service account: explicitly authorize it for each system it should access.

Least-Privilege & Restrictive Scopes: Go further by heavily restricting what agents can do. Design agent tokens so that dangerous actions (bank transfers, personal data access) are not allowed unless absolutely necessary.

For instance, an agent with calendar:read permission should not simultaneously have email:send or bank:transfer scope.

Some suggest even limiting when and how agents can act – e.g. blocking transactions late at night or flagging large purchases for extra review.

These granular permission controls ensure that even if an agent is tricked, its ability to harm you is very limited.

In my opinion, another idea is to have a defined list of websites and services that agents can use - essentially creating a secure and trusted web experience for the agent to vet and re-vet websites and services the agent uses because sites can become insecure eg: hacked without even the owner of the site knowing.

Prompt Hardening and Input Validation: Control exactly what instructions reach the agent. Wherever possible, separate the agent’s core system prompt (how it’s instructed to behave) from any untrusted data (like web content or user input).

Apply filtering on all inputs: block known malicious patterns (like “ignore previous instructions” or embedded code).

For agents reading external content (emails, web pages, documents), consider preprocessing that content in a sandbox first to strip out embedded scripts or HTML injections.

OpenAI also builds refusal training into ChatGPT Agent – it has been trained to reject or flag obviously malicious or ambiguous prompts. Developers should similarly use content moderation or safety classifiers to screen any untrusted instructions before the agent sees them.

Secure Sandboxing: Run agents in heavily confined environments. Unit42 emphasizes that agent’s code interpreters and browsers must be sandboxed: “enforce strong sandboxing with network restrictions, syscall filtering and least-privilege container configurations”.

In practice, this means agents operate in an isolated VM or container with strict firewall rules. Even within that, limit outbound connections (only allow to whitelisted domains) and restrict file system access. The idea is to make an agent’s “computer” so locked-down that even if it executes malicious code, the damage is contained.

An idea I would like to propose is even considering whitelisting only a narrow set of trusted websites or APIs that their agents can use, and regularly re-verifying those sources for safety.

considering whitelisting only a narrow set of trusted websites or APIs that their agents can use, and regularly re-verifying those sources for safety.

Monitoring and Anomaly Detection: Treat agents as first-class citizens in your logging and monitoring systems. Log every action an agent takes (web requests, file operations, API calls) and analyze for anomalies. I would say also provide action history for the end user to view for transparency and safety. Use heuristics or AI to spot when an agent’s behavior deviates from normal patterns (for example, a surge of activity or requests to weird endpoints).

Stytch suggests fingerprinting and device-analysis: many AI agents’ traffic can be distinguished from human users. A clever defense might throttle or flag excessive automation.

Palo Alto is even developing an “AI Runtime Security” solution (Prisma AIRS) to watch network traffic from AI applications and detect threats like prompt injection or data exfiltration in real time. In other words, deploy new “AI security” tools to peer over your agent’s shoulder.

Human-in-the-Loop for Critical Actions: Even as agents automate, keep humans in the loop for sensitive tasks. Require explicit user approval (ideally multi-factor) before the agent does anything high-impact (financial transactions, changing security settings, deleting data). For instance, you might configure your agent to always pause and prompt you whenever it’s about to spend money or transfer files.

Both OpenAI and Stytch emphasize this: ChatGPT Agent is trained to “explicitly ask for your permission before taking actions with real-world consequences”, and experts advise gating high-risk operations behind an MFA or confirmation step. A simple approach is OpenAI’s “takeover mode,” where the user directly types sensitive info or credentials rather than letting the agent handle them. This way the agent never even sees your password or credit card number, dramatically reducing leakage risk.

Continuous Security Testing and Hardening: As with any software, AI agents need ongoing audits. Conduct regular red-team exercises against your agents: simulate prompt-injection attacks and try to trick the agent into misbehaving.

Integrate open-source testing tools that probe for common AI attacks (e.g. malicious prompts, malformed documents) before deploying agents. Adopt a bug bounty or formal review program for agent workflows.

Stytch recommends treating AI agents as you would critical infrastructure: “Subject them to regular and rigorous security testing; stay current on emerging threats… update your defenses accordingly.”. Maintain detailed logs for forensic analysis if something goes wrong.

In practice, many of these defenses echo traditional security wisdom adapted for AI. For example, least-privilege and careful authentication have always been best practice – now we just apply them to agents. As Stytch article concludes, “The good news is that many best practices (like least privilege, strong authentication, and vigilant monitoring) apply for both human users and AI agents – they only need to be extended to this new context”.

So let's now learn about where AI agents are in the grand scheme of AI agent development hype cycle and what the future holds for them.

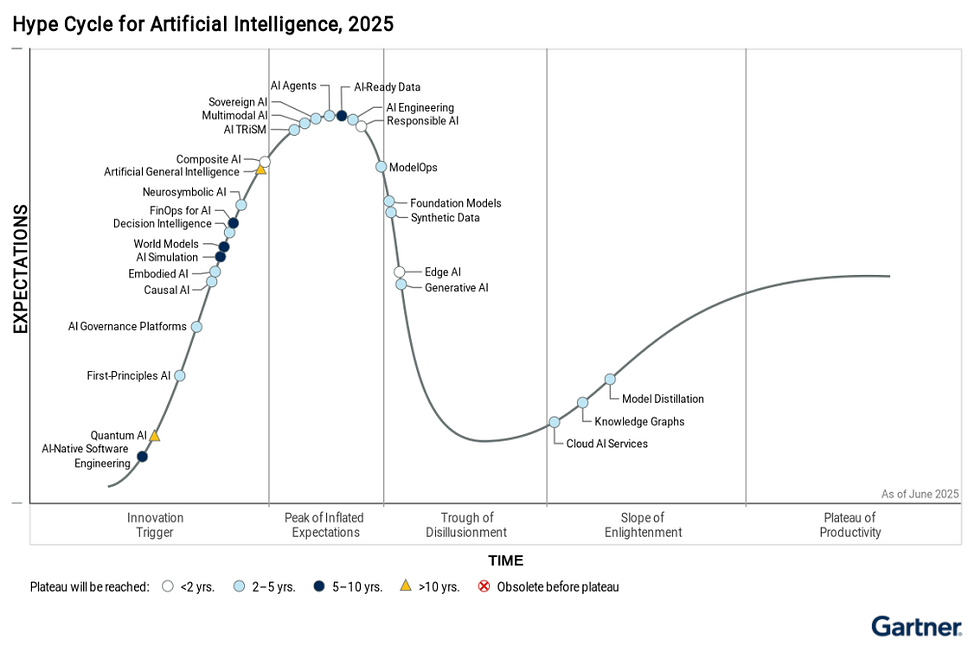

AI Agents in the Hype Cycle

In Gartner’s 2025 Hype Cycle for AI, AI Agents have surged to the “Peak of Inflated Expectations.” This means they’re riding high on media buzz and early adoption, especially with major releases like ChatGPT Agents and Google’s Gemini agentic projects. While their promise is impressive, automating complex tasks, learning user habits, and acting as digital coworkers seems a bit far fetched as the technology is still in its early phase, with many risks not yet fully addressed which we will discuss further in this blog.

Gartner predicts that AI Agents will likely dip into the “Trough of Disillusionment” next, where limitations and security gaps become more apparent. But this is a normal part of the innovation cycle. Technologies that survive this phase usually emerge stronger and more useful.

In time, AI Agents could become highly capable assistants that help with workflows, scheduling, data analysis, and even decision-making, especially in small business environments. And once the AI Agents get to "Plateu of Productivity" on the cycle they will kee getting better and better. They will most likely combine

Figure 2 "Gartners Hype Cycle for AI" below shows how technology hype cycles change over time. Gartner predicted something different just a few years ago. In my opinion the hype cycle cannot be applied the whole concept of AI as new and improved AI can be released and scaled almost like the Moore's law (Definition: Moore's Law suggests that microchip transistor counts double roughly every two years, resulting in greater computing power and lower costs. While not a scientific law, it has been a key benchmark driving progress in the semiconductor industry.). So I think there should be some sort of mapping done on how AI is advancing - perhaps a formula much like Moore's law?

Looking ahead, the future of AI Agents will depend on security, transparency, and careful integration and even more important reliability. There is still the problems inherent in AI in terms of accuracy of output.

As businesses move beyond the hype, they’ll need to prioritize trust, safety, and practical use cases. Those who approach adoption cautiously but strategically will be in a strong position to benefit when AI Agents reach the “Plateau of Productivity.”

Finally, below I discuss the possibilities of AI Agents in the future while at the same time balancing innovation and security.

Balancing Innovation and Security as AI Agents contribute to the future

AI agents are exciting – they can automate tedious tasks and boost productivity for small businesses and IT teams. But we must be realistic about the risks. OpenAI itself stresses a “caution over capability” mindset: ChatGPT Agent includes “always-on monitoring, refusal training, [and] red teaming” to minimize new risks, and users always have the power to “take over” the browser at any time. Still, as one TechRadar commentator put it, “trust is everything” – handing over an AI agent to autonomously spend your money or access accounts can feel unnerving.

In the coming months, organizations will need to decide what tasks they’re comfortable delegating to agents, and build appropriate guardrails

In the coming months, organizations will need to decide what tasks they’re comfortable delegating to agents, and build appropriate guardrails (that includes the AI developers). Will you trust your digital assistant enough to use it for online purchases or entering payment details? Or will you limit it to less-sensitive chores, like gathering publicly available data? Regardless, experts agree: design for security from day one.

As Stytch warns, agents expand your attack surface into new territory, and attackers are already adapting AI to their advantage. By thinking defensively by vetting sources, scoping permissions, enforcing human checkpoints, and monitoring behavior, we can harness AI agents’ benefits while minimizing harm.

In short, AI agents are a powerful new tool, but they must be treated with the same rigor as any other system. With layered defenses and vigilant oversight, businesses can make agents a net positive. Otherwise, an autonomous AI on your team could just as easily become an avenue for attackers.

Happy gliding online,

Michael Plis

References

Sources: Industry experts from OpenAI, Palo Alto Unit42, TechRadar, VentureBeat, Trend Micro and Stytch inform this analysis. These sources detail AI agent capabilities, risks (prompt injection, credential theft, code exploits) and recommended security practices (scoped tokens, sandboxing, monitoring, human-in-loop, etc.). Each recommendation above is grounded in cited research or vendor guidance.

OpenAI – ChatGPT Agents Announcement https://openai.com/index/introducing-chatgpt-agent/

CYBERSEC AI AGENTS CONCERNS COMMENTS: OpenAI Dev Day Live Keynote "Introduction to ChatGPT agent" (Sam Altman’s remarks near the end): 24:41 time index: https://www.youtube.com/live/1jn_RpbPbEc?feature=shared&t=1481

FULL KEYNOTE: OpenAI Dev Day Live Keynote "Introduction to ChatGPT agent" https://www.youtube.com/live/1jn_RpbPbEc?feature=shared

Gartner - Hype Cycle for Artificial Intelligence, 2025 (Paywalled) https://www.gartner.com/en/documents/6579402

OpenAI - ChatGPT Demo Video: New Agent Features https://www.youtube.com/watch?v=3wLBFyYbYcA

Palo Alto Networks Unit42 – Security Risks of LLM-Powered Agents https://unit42.paloaltonetworks.com/security-risks-of-llm-agents/

Trend Micro - Unveiling AI Agent Vulnerabilities Part I: Introduction to AI Agent Vulnerabilities https://www.trendmicro.com/vinfo/au/security/news/threat-landscape/unveiling-ai-agent-vulnerabilities-part-i-introduction-to-ai-agent-vulnerabilities

Trend Micro - Unveiling AI Agent Vulnerabilities Part II: Code Execution https://www.trendmicro.com/vinfo/au/security/news/cybercrime-and-digital-threats/unveiling-ai-agent-vulnerabilities-code-execution

Trend Micro - Unveiling AI Agent Vulnerabilities Part III: Data Exfiltration https://www.trendmicro.com/vinfo/au/security/news/threat-landscape/unveiling-ai-agent-vulnerabilities-part-iii-data-exfiltration

Stytch – AI agent security explained https://stytch.com/blog/ai-agent-security-explained/

Stytch - AI agent fraud: key attack vectors and how to defend against them https://stytch.com/blog/ai-agent-fraud/

TechRadar – ChatGPT Agent shows that there’s a whole new world of AI security threats on the way we need to worry about https://www.techradar.com/computing/artificial-intelligence/chatgpt-agent-shows-that-theres-a-whole-new-world-of-ai-security-threats-on-the-way-we-need-to-worry-about

VentureBeat - How OpenAI’s red team made ChatGPT agent into an AI fortress https://venturebeat.com/security/openais-red-team-plan-make-chatgpt-agent-an-ai-fortress/

VentureBeat - How prompt injection can hijack autonomous AI agents like Auto-GPT https://venturebeat.com/security/how-prompt-injection-can-hijack-autonomous-ai-agents-like-auto-gpt/

VentureBeat - Invisible, autonomous and hackable: The AI agent dilemma no one saw coming https://venturebeat.com/security/invisible-autonomous-and-hackable-the-ai-agent-dilemma-no-one-saw-coming/

VentureBeat - The great AI agent acceleration: Why enterprise adoption is happening faster than anyone predicted https://venturebeat.com/ai/the-great-ai-agent-acceleration-why-enterprise-adoption-is-happening-faster-than-anyone-predicted/

VentureBeat - Gartner: 2025 will see the rise of AI agents (and other top trends) https://venturebeat.com/security/gartner-2025-will-see-the-rise-of-ai-agents-and-other-top-trends/

GitHub – Auto-GPT Project https://github.com/Torantulino/Auto-GPT

AgentGPT – Autonomous AI Agent Framework https://agentgpt.reworkd.ai/

AutoGPT - Open Source AI Agent https://agpt.co/ (Wikipedia: https://en.wikipedia.org/wiki/AutoGPT )

BabyAGI – AI Agent Framework by Yohei Nakajima https://github.com/yoheinakajima/babyagi

Google DeepMind - Introducing Gemini 2.0: our new AI model for the agentic era https://blog.google/technology/google-deepmind/google-gemini-ai-update-december-2024/